As we have seen above as mathematical models progress, they leave the realm of interpretability and enter the dimension of explainability.

Simple linear regressions or decision trees are examples of interpretable models while nowadays used more sophisticated models like convolutional neural networks or random forest models are considered black box models. These black box models need special techniques to explain how the results or outcomes or predictions have been formed.

These techniques can be either grouped by locality or specifity. Locality means that some techniques focus on the result of a specific input (e.g. to prove if a model is biased towards gender) or on the global formula itself. Specifity means that some models only work for certain groups of models (like regressions or convolutional neural networks) and other techniques are so called “model-agnostic”.

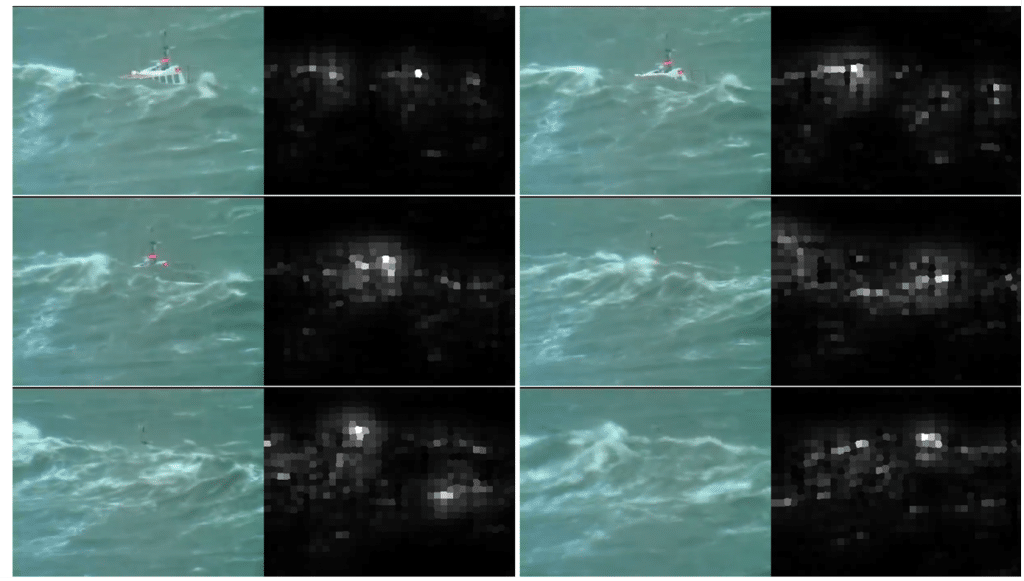

As we talked about convolutional neural networks (CNN), I would like to point your attention to the oldest method of explaining a CNN: saliency maps. Saliency maps basically re-engineer the flow of data from back to front and show which feature each layer in the network is using. Below you are seeing an example of a saliency map. As you see, the observer can determine, which feature of the picture is being used by the model to make its decisions.

Guosiyuan 123, CC BY-SA 4.0 via Wikimedia Commons

To be very honest, explainability of more and more complex neural models with millions and soon billions of neurons is not an easy task. And it will take years, if not decades when we can truly explain with a very high degree of certainty why the model is behaving the way it does. This will and should make us feel a bit uncomfortable as we feel to lose more and more control over the “reasoning” of our machines. If you look at the first principle of human autonomy or the principle of prevention of harm: Are we really able to grant autonomy, prevent harm and promise fairness, if we can’t explain the reasoning of our machines? I hope that the development of explaining techniques will have the same speed and attention as the development of bigger and bigger neural networks.

In the next chapter will start looking into how we can realize Trustworthy A.I. using the previously established four ethical principles.